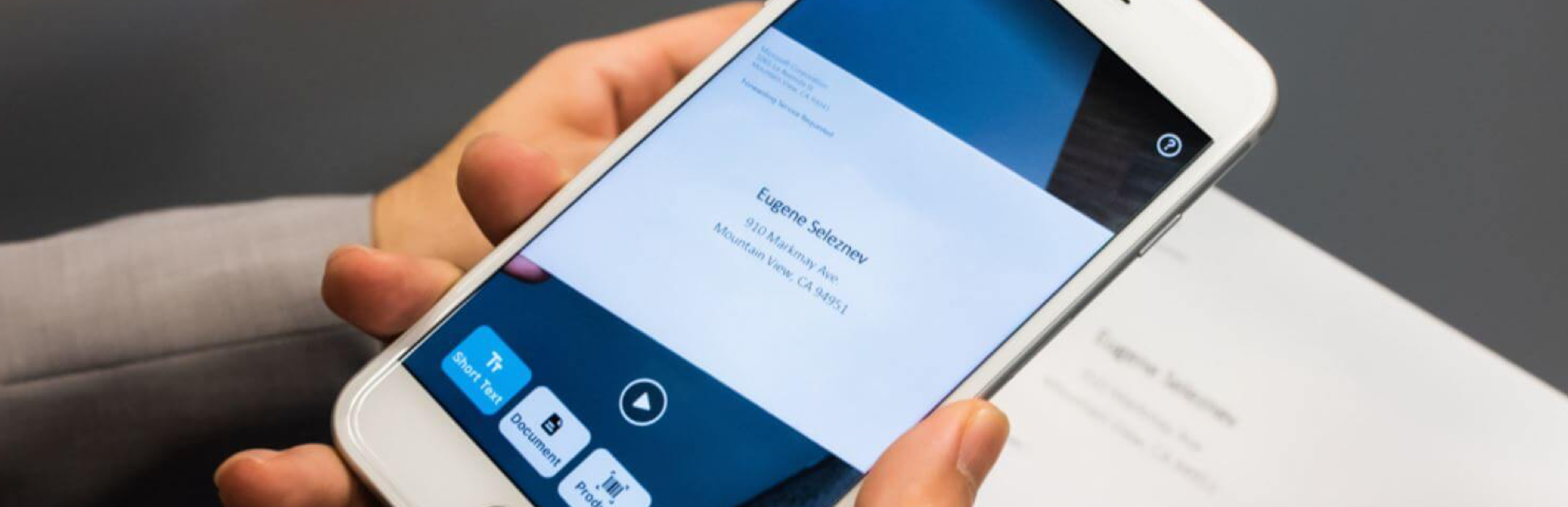

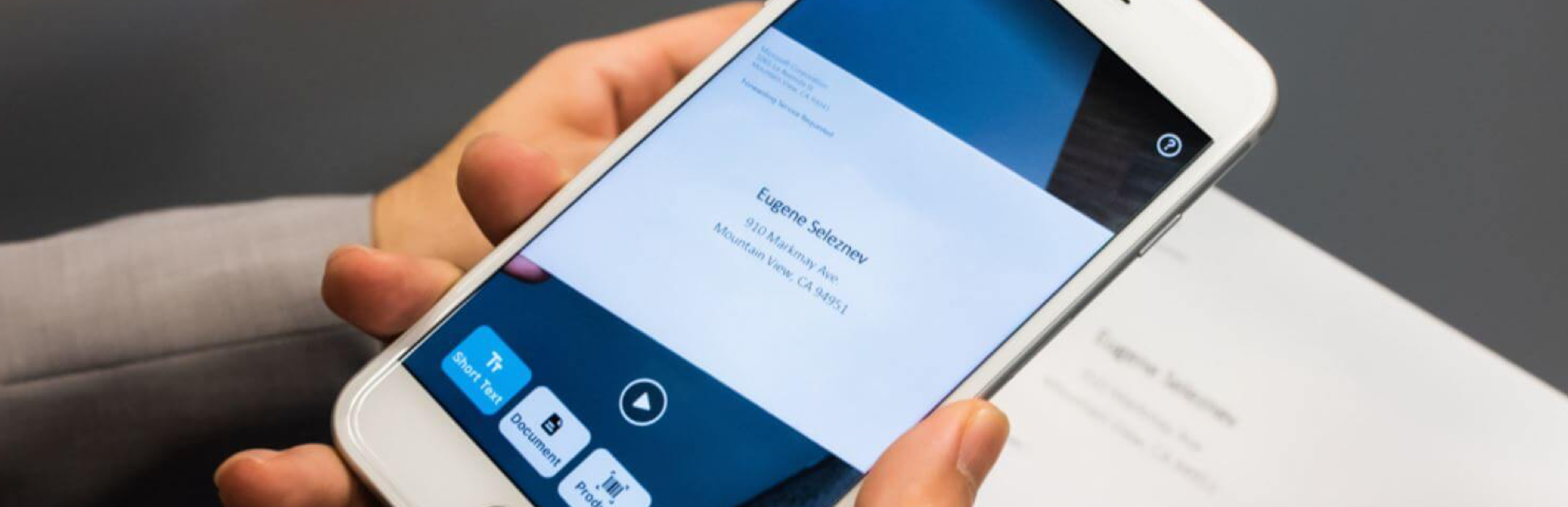

Seeing AI, 2017

Seeing AI is a mobile application that narrates the world around you. The app is designed for the low-vision community and uses machine learning and AI to describe people, text, and objects.

Responsibility: UX, UI, User Research, Accessibility, Prototyping

Credits: Seeing AI Development Team, Foundry Incubation Lab, Microsoft Garage Team

Seeing AI App (iOS download)

Process

We interviewed more than 20 people with visual impairments and asked about the mismatches they face on a daily basis. Many of the interviewees indicated the need to locate a chair or a door. In addition, Saqib Shaikh (project owner) agreed on the need for this experience.

AudioHaptics

The application uses audio and haptics to help the visually impaired community. In the example below, it helps a person locate a chair in their space. If the chair is on the user's right, the audio and haptic feedback comes from the right; if it is on the left, the feedback comes from the left. As the user gets closer to the chair, the audio pulses faster until they reach it. (The black pulsing dot represents the audio and haptics.)
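
The behavior described above can be sketched as a small mapping from the chair's position to feedback parameters. This is an illustrative sketch only, not the app's actual implementation: the function name `chair_feedback`, the linear mappings, and the default ranges (5 m maximum distance, pulse intervals between 0.1 s and 1.0 s) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    pan: float               # -1.0 = fully left, 0.0 = center, +1.0 = fully right
    pulse_interval_s: float  # seconds between audio/haptic pulses


def chair_feedback(
    bearing_deg: float,           # hypothetical input: angle to chair, negative = left
    distance_m: float,            # hypothetical input: distance to chair in meters
    max_distance_m: float = 5.0,  # assumed range beyond which pulses are slowest
    min_interval_s: float = 0.1,  # assumed fastest pulse (at the chair)
    max_interval_s: float = 1.0,  # assumed slowest pulse (far away)
) -> Feedback:
    # Side: clamp the bearing to [-90, 90] degrees and scale it to [-1, 1].
    bearing = max(-90.0, min(90.0, bearing_deg))
    pan = bearing / 90.0

    # Proximity: 0.0 when far away, 1.0 when the user has reached the chair.
    closeness = 1.0 - max(0.0, min(1.0, distance_m / max_distance_m))

    # Closer means a shorter interval, so the pulse speeds up.
    interval = max_interval_s - closeness * (max_interval_s - min_interval_s)
    return Feedback(pan=pan, pulse_interval_s=interval)
```

For instance, a chair to the user's right yields a positive `pan`, and calling the function repeatedly as `distance_m` shrinks yields steadily shorter pulse intervals. A linear mapping is the simplest choice here; a real design would likely tune the curve through user testing.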